✍️ Papers worth reading

Published:

Compilation of some papers that I enjoyed reading.

[2504.16929] I‑Con: A Unifying Framework for Representation Learning

Proposes a single equation that aims to unify diverse machine learning approaches: clustering, contrastive learning, supervised learning, and dimensionality reduction. The core idea is that each of these methods minimizes a loss based on the KL divergence between two conditional distributions, p and q, where p is the target distribution derived from the true data and q is the distribution learned by the model.

“Universal” loss function:

\[\mathcal{L}(\theta, \phi) = \int_{i, j \in \mathcal{X}} p_\theta(j \mid i) \log \frac{p_\theta(j \mid i)}{q_\phi(j \mid i)}\]

This framework aspires to act as a “periodic table of machine learning”, suggesting that the current gaps within this formulation may inspire new model types or architectural innovations.
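To make the loss above a bit more concrete, here is a minimal NumPy sketch of a discrete version of it: the integral is replaced by a sum over a small set of points, and the result is averaged over the anchor points i. The choice of Gaussian-kernel neighborhoods for both p and q (loosely in the spirit of SNE-style dimensionality reduction) is my own assumption for illustration, as are the helper names `row_softmax`, `neg_sq_dists` and `icon_loss`; none of this is the paper's reference code.

```python
import numpy as np

def row_softmax(scores):
    """Turn a square score matrix into row-wise conditional distributions
    over neighbors j given i, excluding self-pairs (i == j)."""
    scores = scores.astype(float).copy()
    np.fill_diagonal(scores, -np.inf)             # a point is not its own neighbor
    scores -= scores.max(axis=1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)

def neg_sq_dists(a):
    """Negative squared Euclidean distances between all rows of `a`."""
    diff = a[:, None, :] - a[None, :, :]
    return -(diff ** 2).sum(axis=-1)

def icon_loss(p, q, eps=1e-12):
    """Average over i of KL(p(. | i) || q(. | i)) for row-stochastic p, q."""
    kl_terms = p * (np.log(p + eps) - np.log(q + eps))
    return kl_terms.sum(axis=1).mean()

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 5))   # hypothetical input points
z = rng.normal(size=(6, 2))   # hypothetical learned embeddings

p = row_softmax(neg_sq_dists(x))   # target neighborhoods from the data
q = row_softmax(neg_sq_dists(z))   # neighborhoods induced by the embeddings
loss = icon_loss(p, q)
```

In an actual method, `z` would come from a trainable model and `loss` would be minimized with respect to its parameters; swapping in different definitions of p and q is what lets one formula cover different families of methods.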

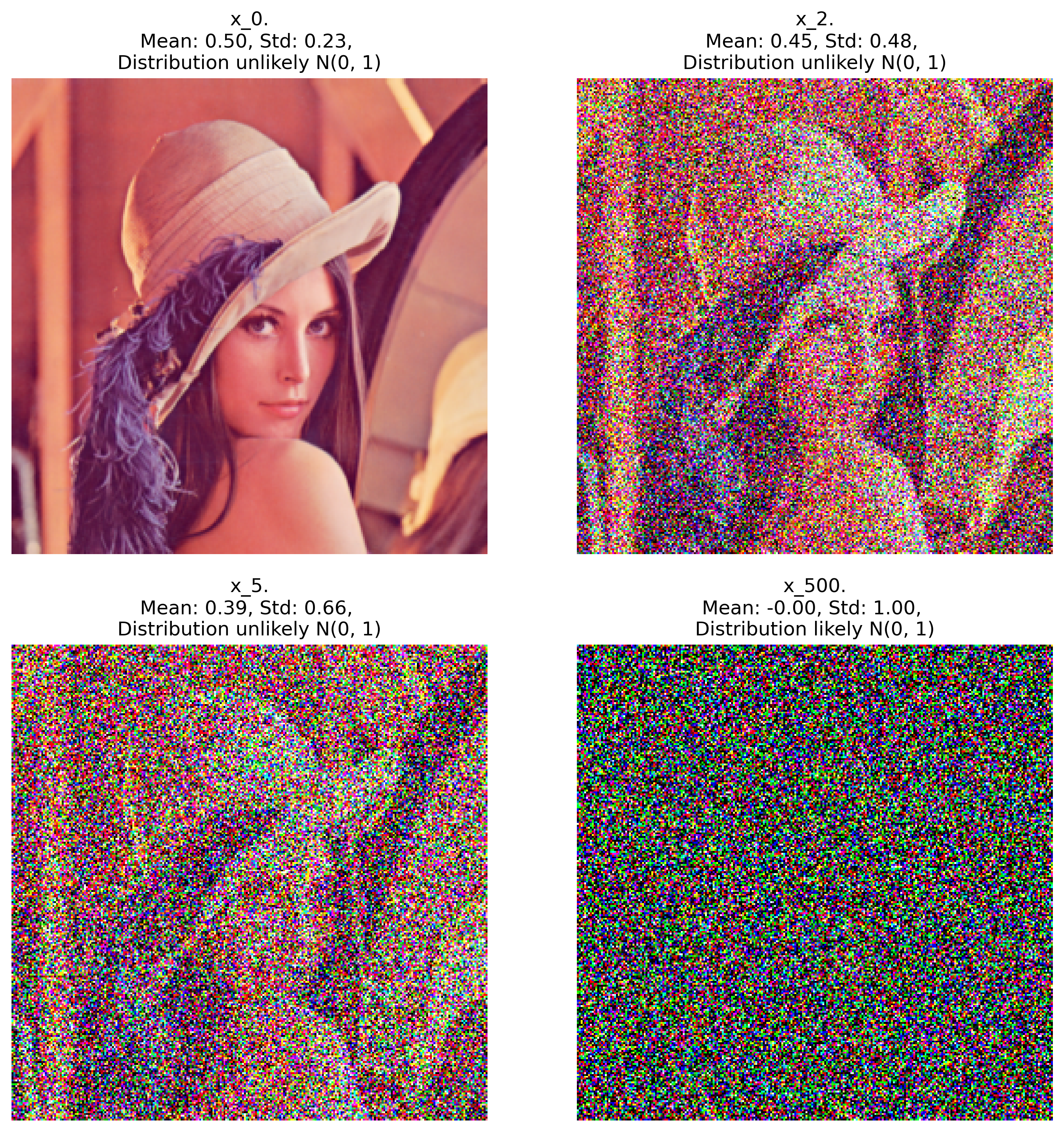

[2006.11239] Denoising Diffusion Probabilistic Models

This is a classic paper in generative modeling that introduces diffusion models (well, there was a previous paper in 2015, 1503.03585). Diffusion models are trained to remove Gaussian noise; the main idea is a model that gradually transforms a Gaussian noise image into an actual image.

The paper is mathematically heavy; I have a blog entry with notes on diffusion models.
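As a rough illustration of the noising side of this idea, here is a minimal NumPy sketch of the forward process, where an image x_0 is turned into a noisy x_t in closed form: x_t = sqrt(ᾱ_t) x_0 + sqrt(1 - ᾱ_t) ε. The linear schedule from 1e-4 to 0.02 follows the paper's setup, but the 500 steps, the random 8x8 stand-in "image" and the function names are assumptions made for this example. The learned part (a network that predicts the noise so the process can be run in reverse) is not shown.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0, t, alpha_bar, rng):
    """Sample x_t from q(x_t | x_0) = N(sqrt(abar_t) * x0, (1 - abar_t) * I).
    Returns the noisy sample and the noise that was added."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

num_steps = 500                          # assumption, matching the figure caption
betas = linear_beta_schedule(num_steps)
alpha_bar = np.cumprod(1.0 - betas)      # cumulative fraction of signal kept

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))     # stand-in for a normalized image

x_early, _ = forward_diffuse(x0, 0, alpha_bar, rng)                     # almost clean
x_late, noise_late = forward_diffuse(x0, num_steps - 1, alpha_bar, rng) # mostly noise
```

During training, the network sees `x_t` and `t` and is asked to predict `noise`; at sampling time the same schedule is walked backwards, starting from pure Gaussian noise.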

Example of diffusion: over 500 steps, the Gaussian noise image becomes an actual image.

[2303.09861] SpiRobs: Logarithmic spiral-shaped robots for versatile grasping across scales

This paper proposes an alternative to classical robotic arms: tentacle-like robots based on logarithmic spirals.
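For reference, a logarithmic spiral is the curve r(θ) = a·e^(bθ) in polar coordinates. The small sketch below only illustrates that curve and its self-similarity (each full turn scales the radius by the same factor), which is presumably what makes the shape attractive for grasping at different scales. The parameter values `a` and `b` and the function names are hypothetical and not taken from the paper.

```python
import math

def spiral_radius(theta, a=1.0, b=0.2):
    """Radius of a logarithmic spiral r = a * exp(b * theta)."""
    return a * math.exp(b * theta)

def spiral_point(theta, a=1.0, b=0.2):
    """Cartesian coordinates of the spiral at angle theta (radians)."""
    r = spiral_radius(theta, a, b)
    return r * math.cos(theta), r * math.sin(theta)

# Sample three full turns of the spiral.
points = [spiral_point(k * math.pi / 16) for k in range(6 * 16 + 1)]

# Self-similarity: one extra turn multiplies the radius by exp(2 * pi * b),
# regardless of where on the spiral we start.
growth_per_turn = spiral_radius(2 * math.pi) / spiral_radius(0.0)
```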